Overview

This project demonstrates a full machine learning operations (MLOps) pipeline that takes vehicle insurance data from ingestion through model deployment. The goal is a scalable, automated system that can manage real-world ML workflows using modern tools and cloud services. It combines data processing, model training, validation, and CI/CD to simulate a production environment.

My Role & Contributions

- Designed and implemented the complete MLOps workflow.

- Built data ingestion, validation, and transformation modules using Python.

- Trained a machine learning model and deployed it using Docker and AWS EC2.

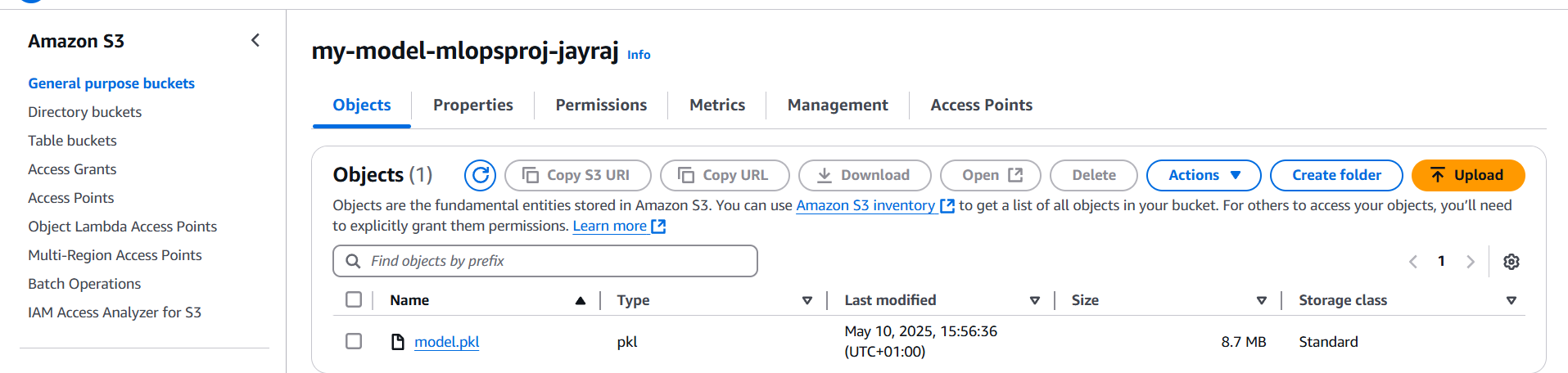

- Set up MongoDB for data storage and AWS S3 for model versioning.

- Automated deployment using GitHub Actions and Docker containers.

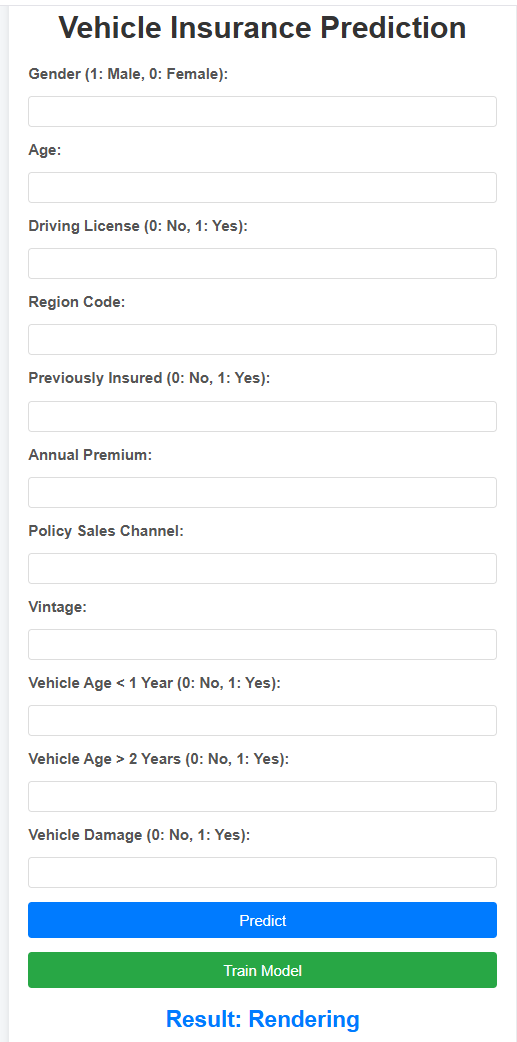

- Developed a FastAPI-based API for predictions and created a minimal web UI.
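The model versioning idea mentioned above can be illustrated with a small sketch. The write-up does not state how objects are named in S3, so the key prefix, the timestamp layout, and the helper names `versioned_key` and `latest_key` are all assumptions made for illustration, not the project's actual scheme. The sketch only builds and compares key strings; it does not talk to AWS.

```python
from datetime import datetime, timezone
from typing import Iterable, Optional

# Hypothetical key prefix; the real bucket layout is not described in this write-up.
MODEL_PREFIX = "models/vehicle-insurance"


def versioned_key(trained_at: datetime, prefix: str = MODEL_PREFIX) -> str:
    """Build an object key whose lexical order matches training time (UTC)."""
    stamp = trained_at.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{prefix}/{stamp}/model.pkl"


def latest_key(keys: Iterable[str], prefix: str = MODEL_PREFIX) -> Optional[str]:
    """Return the newest model key, or None when no version has been stored."""
    candidates = [
        key
        for key in keys
        if key.startswith(prefix + "/") and key.endswith("/model.pkl")
    ]
    # Fixed-width UTC timestamps sort lexically in chronological order.
    return max(candidates, default=None)
```

Because the timestamp is fixed-width and in UTC, picking the latest version reduces to a string comparison, which keeps the lookup independent of any particular storage client.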

Tech Stack

- Python, MongoDB, FastAPI, Flask, Docker, GitHub Actions, AWS S3, AWS EC2

Implementation Details

- Project Initialization: Started with a modular folder structure using a custom template script.

- Data Ingestion: Pulled structured data from MongoDB using secure connection functions.

- Data Processing: Validated input using a schema file and transformed it for model readiness.

- Model Development: Trained models using custom estimators and encapsulated logic for reusability.

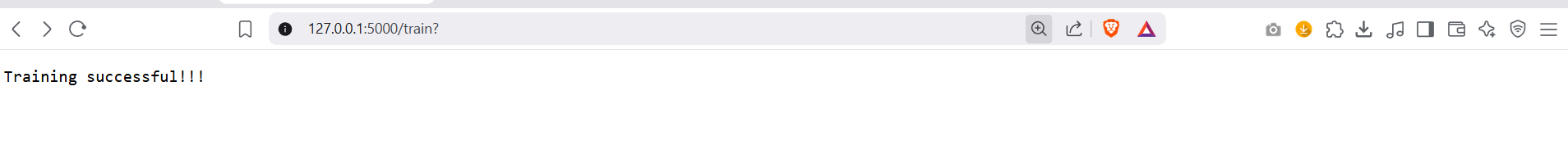

- Deployment: Packaged the entire pipeline into a Docker container, deployed it on an EC2 instance, and automated updates using GitHub Actions.

- User Interface: Built a simple frontend with Flask, HTML templates, and static files for predictions.
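The data processing and model development steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the project's code: the schema contents, the column names, and the names `validate_record`, `InsuranceEstimator`, `to_features`, and `ThresholdModel` are hypothetical, and the stand-in model replaces whatever was actually trained.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Mapping, Sequence

# Hypothetical schema: column name -> expected Python type.
# The real schema file and its columns are not shown in this write-up.
SCHEMA: Dict[str, type] = {
    "Age": int,
    "Vehicle_Damage": str,
    "Annual_Premium": float,
}


def validate_record(record: Mapping[str, Any], schema: Mapping[str, type]) -> List[str]:
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for column, expected in schema.items():
        if column not in record:
            problems.append(f"missing column: {column}")
        elif not isinstance(record[column], expected):
            actual = type(record[column]).__name__
            problems.append(f"{column}: expected {expected.__name__}, got {actual}")
    return problems


@dataclass
class InsuranceEstimator:
    """Bundle preprocessing with a model so callers can pass raw records."""

    transform: Callable[[Mapping[str, Any]], Sequence[float]]
    model: Any  # any object exposing predict(rows)

    def predict(self, records: Sequence[Mapping[str, Any]]) -> List[int]:
        rows = [self.transform(record) for record in records]
        return list(self.model.predict(rows))


def to_features(record: Mapping[str, Any]) -> List[float]:
    """Stand-in transformation: encode the record as a numeric row."""
    damaged = 1.0 if record["Vehicle_Damage"] == "Yes" else 0.0
    return [float(record["Age"]), damaged, float(record["Annual_Premium"])]


class ThresholdModel:
    """Stand-in for a trained model: predicts 1 when damage is reported."""

    def predict(self, rows: Sequence[Sequence[float]]) -> List[int]:
        return [1 if row[1] == 1.0 else 0 for row in rows]
```

Wrapping the transformation and the model in one object means the prediction API only needs to validate a record and call `predict`, which is the reusability benefit the encapsulation is aiming for.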

Results & Impact

- Delivered a working end-to-end ML pipeline covering ingestion, validation, training, and automated deployment.

- Gained hands-on experience with MLOps tools, cloud platforms, and automation best practices.

- Improved understanding of model versioning, reproducibility, and deployment workflows.

- The project showcases my ability to build scalable ML systems and is a key highlight of my portfolio.