Overview

This system summarizes chat-style conversations using a PEGASUS model fine-tuned on the SAMSum dataset. It demonstrates a complete machine learning lifecycle: data curation, training, version control, CI/CD deployment, and an inference API.

My Role & Contributions

- Data & Schema: Chose SAMSum, defined I/O schema.

- Modeling: Fine-tuned PEGASUS, evaluated via ROUGE.

- Pipelines: Modular ML pipelines with config-driven architecture.

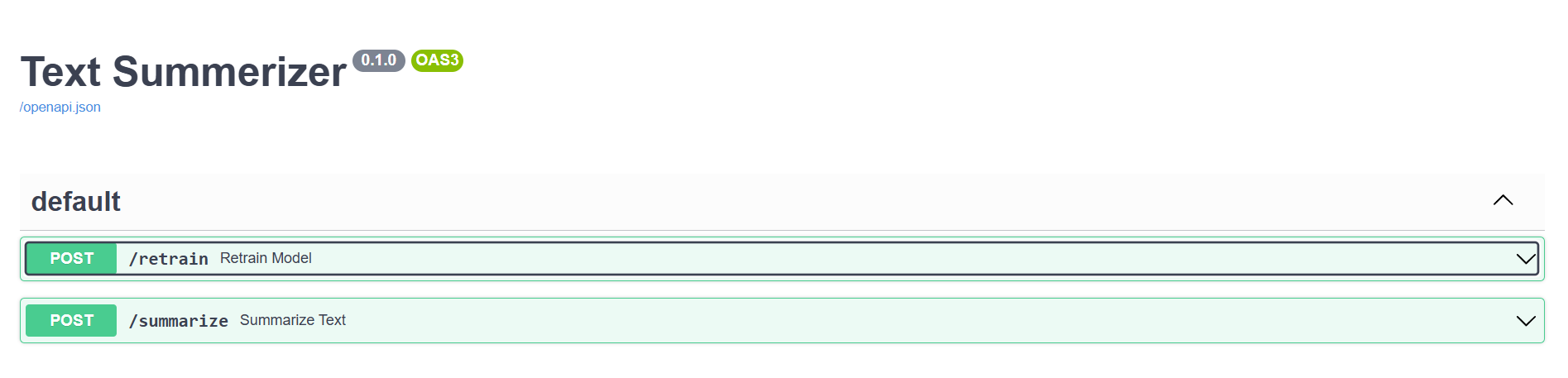

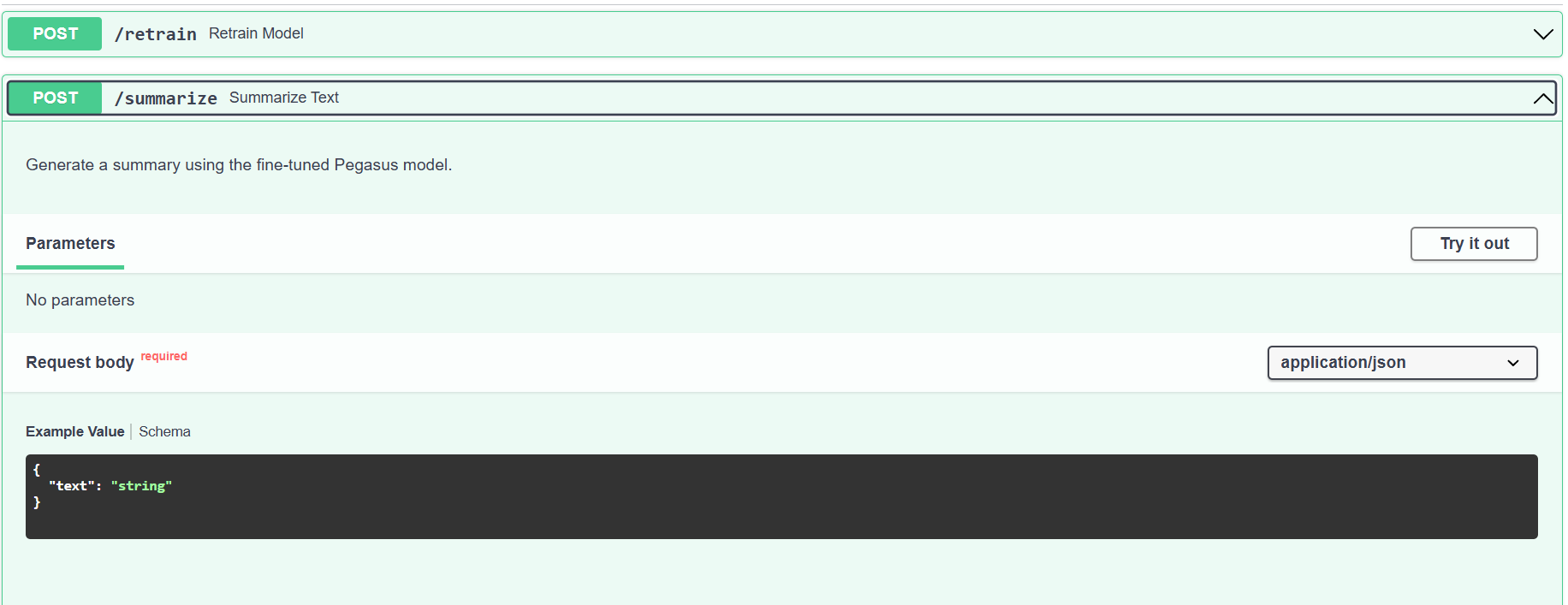

- Deployment: FastAPI app, Dockerized, CI/CD with GitHub Actions + AWS EC2.

- Monitoring: Logging, inference endpoint with live request handling.
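
To make the ROUGE evaluation mentioned above more concrete, here is a minimal sketch of how a ROUGE-1 F1 score can be computed from unigram overlap. It is a simplified illustration, assuming lowercased whitespace tokenization with no stemming. It is not the evaluation code behind the reported score, and the function name `rouge1_f1` and the example sentences are made up for this sketch.

```python
from collections import Counter


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1 F1: unigram overlap on lowercased whitespace tokens."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    if not ref_counts or not cand_counts:
        return 0.0
    # Clipped overlap: a word counts at most as often as it appears in both texts.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference/candidate pair, for illustration only.
reference = "Amanda baked cookies and will bring Jerry some tomorrow."
candidate = "Amanda will bring Jerry cookies tomorrow."
score = rouge1_f1(reference, candidate)
print(f"ROUGE-1 F1: {score:.3f}")
```

Standard ROUGE implementations typically add their own tokenization and optional stemming, so scores from this sketch will not match them exactly.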

Tech Stack

Python

FastAPI

Docker

AWS EC2

GitHub Actions

Transformers

Tokenizers

Matplotlib

Implementation Details

- Modular Structure: Separated components into `components/`, `pipeline/`, `config/`.

- Config-Driven: Reproducible via `config.yaml` + `params.yaml`.

- Inference Server: FastAPI `/predict` endpoint with JSON input/output.

- Docker & CI/CD: GitHub Actions, AWS ECR, auto-deployed to EC2.
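
The JSON input/output contract of the inference endpoint can be sketched without any web framework. The example below is a framework-agnostic illustration, not the project's actual FastAPI code: the field names `dialogue` and `summary`, the function `handle_predict`, and the stand-in summarizer are all assumptions made for this sketch. In the real service, a FastAPI route would presumably wrap similar logic around the fine-tuned model.

```python
import json
from dataclasses import asdict, dataclass
from typing import Callable


@dataclass
class PredictRequest:
    dialogue: str  # hypothetical field name for the input conversation


@dataclass
class PredictResponse:
    summary: str  # hypothetical field name for the generated summary


def handle_predict(raw_body: str, summarize: Callable[[str], str]) -> str:
    """Parse a JSON request body, run the summarizer, return a JSON response."""
    payload = json.loads(raw_body)
    if not isinstance(payload, dict):
        raise ValueError("request body must be a JSON object")
    text = payload.get("dialogue")
    if not isinstance(text, str) or not text.strip():
        raise ValueError("'dialogue' must be a non-empty string")
    request = PredictRequest(dialogue=text)
    response = PredictResponse(summary=summarize(request.dialogue))
    return json.dumps(asdict(response))


def first_line_summarizer(dialogue: str) -> str:
    """Stand-in for the fine-tuned model: returns the first non-empty line."""
    for line in dialogue.splitlines():
        if line.strip():
            return line.strip()
    return ""


body = json.dumps({"dialogue": "Anna: Lunch at noon?\nBen: Sure, see you then."})
result = handle_predict(body, first_line_summarizer)
print(result)
```

Passing the summarizer in as a callable keeps the request handling testable without loading model weights.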

Results & Impact

- Achieved ROUGE-1 F1 ≈ 45 on SAMSum summaries.

- Shortened verbose conversations by 60–70% in length while retaining meaning.

- CI/CD reduced deployment from hours to minutes.

- Scalable architecture ready for containerized environments.
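
One way a length reduction like the 60–70% figure above could be measured is by comparing word counts of the source conversation and its summary. The sketch below assumes whitespace-delimited words as the unit. The source does not say how the figure was actually computed, and the function name `length_reduction` and the sample texts are hypothetical.

```python
def length_reduction(dialogue: str, summary: str) -> float:
    """Fraction by which the summary is shorter than the dialogue, in words."""
    source_words = len(dialogue.split())
    if source_words == 0:
        raise ValueError("dialogue must contain at least one word")
    return 1 - len(summary.split()) / source_words


# Hypothetical example pair, for illustration only.
dialogue = (
    "Anna: Are we still on for lunch today? "
    "Ben: Yes, noon at the usual place works for me. "
    "Anna: Great, see you there."
)
summary = "Anna and Ben will meet for lunch at noon."
reduction = length_reduction(dialogue, summary)
print(f"Length reduction: {reduction:.0%}")
```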